Real-time analytics is changing the way we work with data. The days of generating dashboards in the morning based on data processed overnight are over. Now, we look to build real-time dashboards and embed analytics into user-facing products and features. In this arena, real time analytics can provide powerful differentiation for modern companies.

But, real time analytics platform capabilities can come at a cost if approached the wrong way, something today's cost-conscious engineering teams are forced to grapple with. Everybody likes the idea of real-time data analytics for their data analytics real time projects, but many carry the belief that the costs will outweigh the benefits. How can you use real-time data analytics while staying within your people, hardware, and cloud budgets?

This is your definitive guide to real-time analytics.

Read on to find everything you need to know about real-time analytics and how to cost-effectively build real-time analytics use cases for your business.

Table of Contents

- What is real-time analytics?

- How is real-time analytics different?

- Examples of real-time analytics

- Real-time analytics use cases

- Benefits of real-time analytics

- Challenges with real-time analytics

- Controlling costs with real-time analytics

- Real-time analytics tools and architecture

- Getting started with real-time analytics

What is real-time analytics?

Real-time analytics means spanning the entire data analytics journey, from capture to consumption, in seconds or less. Its function is to ingest streaming data, transform and enrich it, and expose it to user-facing applications or real-time data visualizations. It's a game-changer for modern data applications.

Unlike traditional data analytics, which utilizes batch data ingestion and processing techniques to serve business intelligence (BI) use cases, real-time analytics uses real-time data ingestion and processing to serve operational intelligence and user-facing analytics.

The five facets of real-time analytics

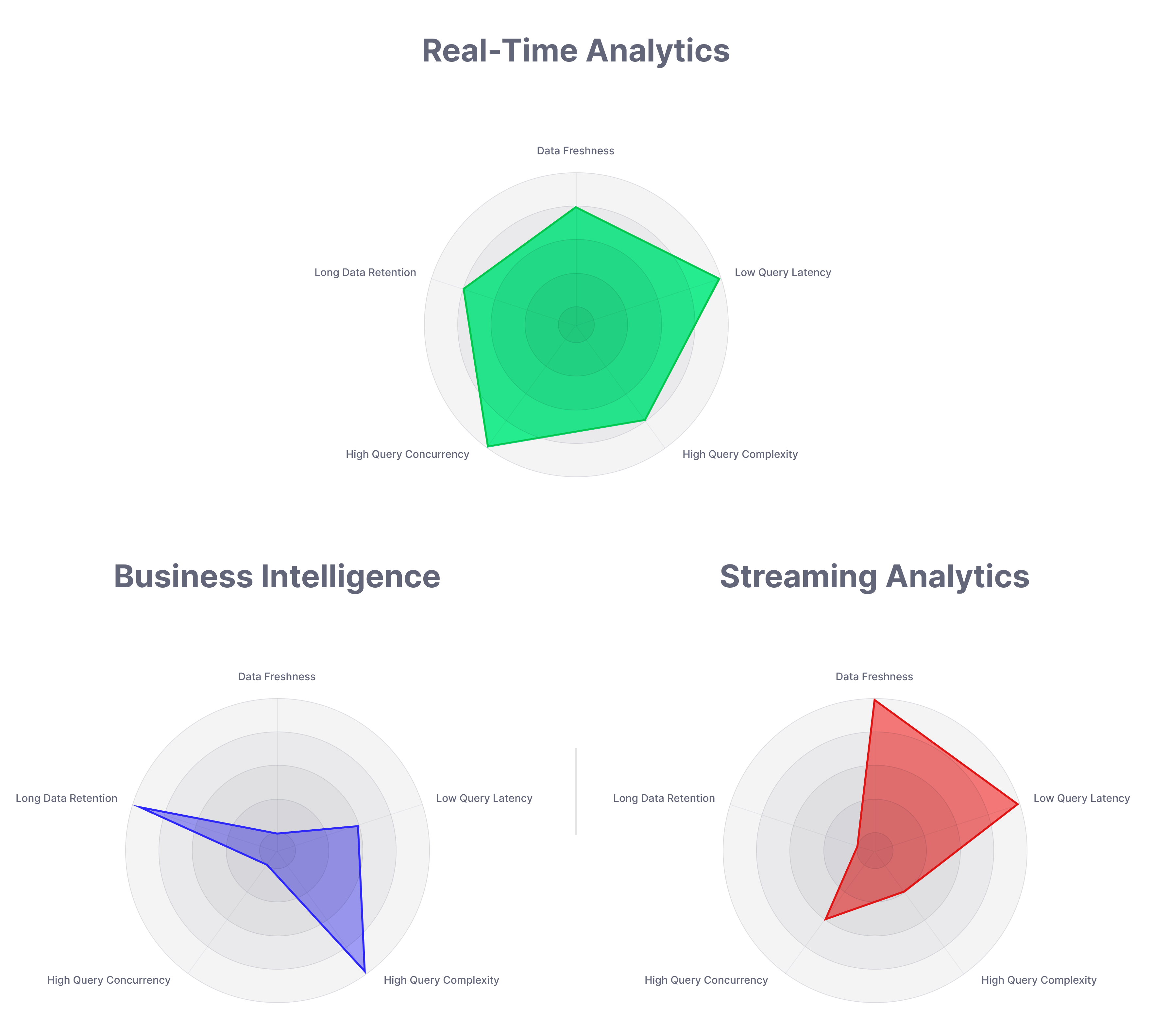

Real-time analytics can be further understood by its five core facets, namely:

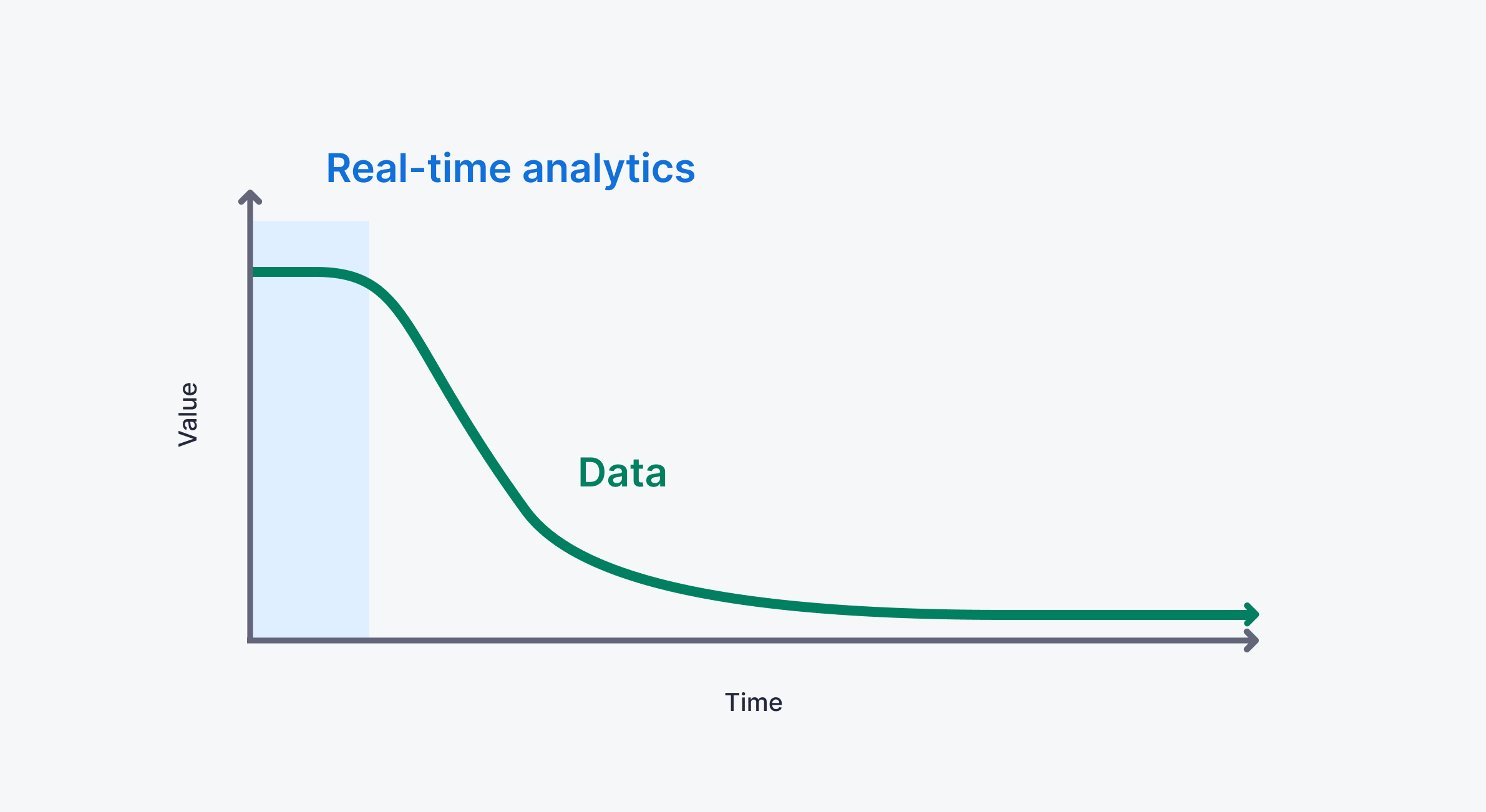

- Data Freshness. Data is most valuable right after it is generated. Data freshness describes the age of data relative to the time it was created. For you SQL junkies, it's `SELECT now() - timestamp AS freshness`. Real-time analytics systems capture data at peak freshness, that is, as soon as it is generated.

- Low Query Latency. Because real-time analytics is often used to power user-facing data applications, low query latency is a must. As a general rule of thumb, real-time analytics queries should respond in 50 milliseconds or less to avoid degrading the user experience.

- High Query Complexity. Real-time analytics makes complex queries over large data sets, involving filters, aggregates, joins, and other complex data analytics. Regardless, real-time analytics must handle these complexities and maintain low latency.

- Query Concurrency. Real-time analytics is designed to be accessed by many different end users, concurrently. Real-time analytics must support thousands or even millions of concurrent requests.

- Long Data Retention. Real-time analytics retains historical data for comparison and enrichment, so it must be optimized to access data captured over long periods. That said, real-time analytics tends to prefer minimizing raw data storage where possible, preferring instead to store long histories of aggregated data.

How is real-time analytics different?

You may be wondering, "How is real-time analytics different from traditional data analytics?" Real-time analytics differs from other approaches to data analytics, including business intelligence and streaming analytics.

Real-Time Analytics vs. Batch Analytics

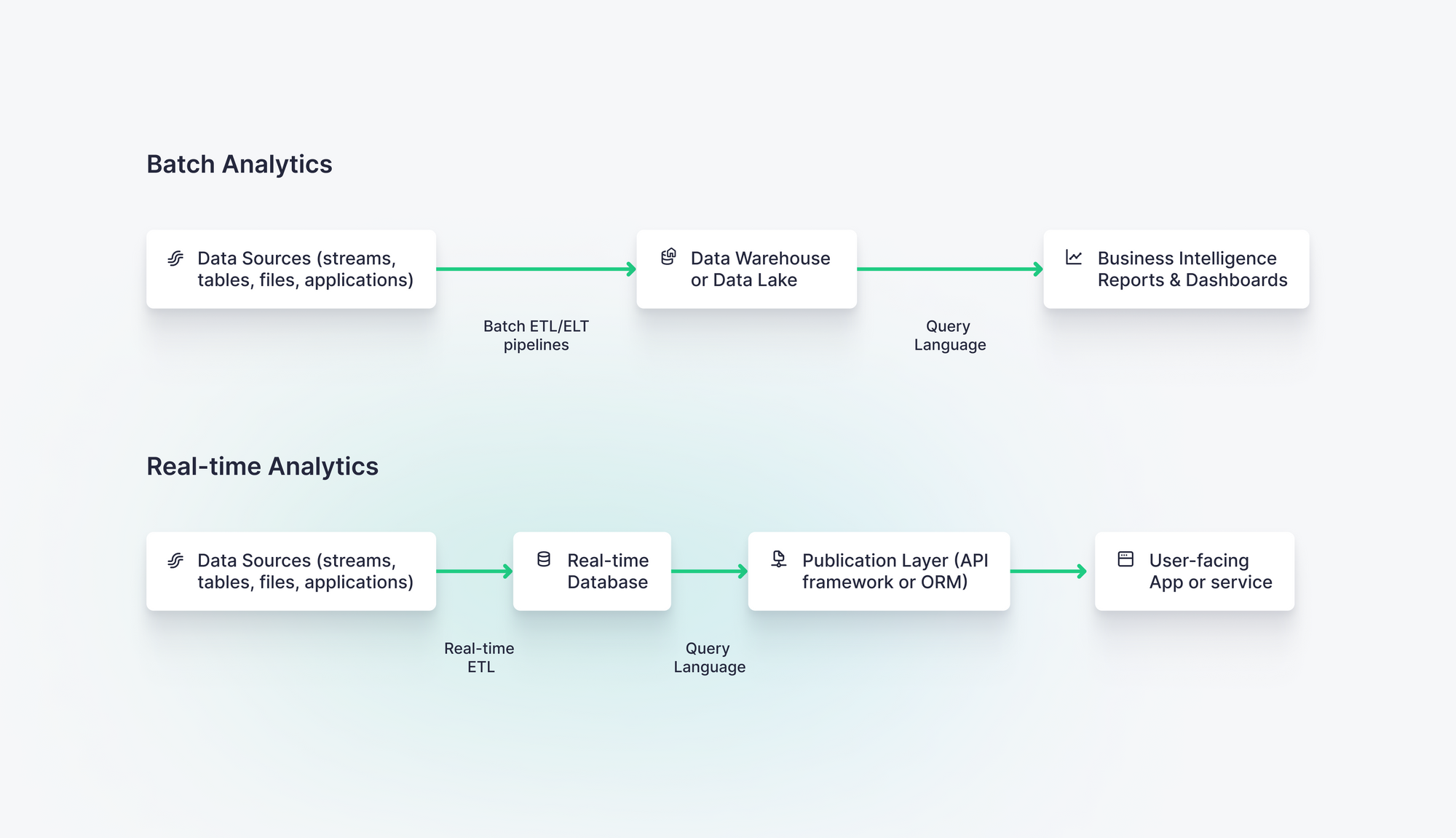

Batch analytics is a traditional form of data analytics often used for business intelligence (BI). It typically involves batch data processing techniques like extract, transform, load (ETL) or extract, load, transform (ELT) to capture data from source data systems, place that data in a cloud data warehouse, and query that data using SQL and data visualization tools designed for BI.

Batch data pipelines have proven tremendously useful for both business intelligence and data science disciplines. They can be used to generate traditional data analytics views and also train machine learning models that need to crunch and re-crunch large amounts of data over time.

Batch data processing leverages technical approaches - most notably data warehousing - based on its functional requirement to inform long-term business decision-making, most often at the executive and management levels.

Real-time data analytics, on the other hand, helps with the tangible, day-to-day, hour-to-hour, and minute-to-minute decisions that materially impact how a business operates. Where batch focuses on measuring the past to predict or inform the future, real-time data analytics focuses on the present. It answers questions like “Do we need to order or redistribute stock today?” or “Which offer should we show this customer right now?”

Unlike batch analytics, real-time analytics depends on real-time data ingestion, capturing data into analytics systems using streaming platforms and event-driven architectures. It leverages real-time data processing, incrementally updating analytics immediately as new data is generated rather than waiting on scheduled ETL processes.

Batch analytics is most commonly used for business intelligence, whereas real-time analytics is most commonly used for customer-facing data

Functionally, real-time data analytics is increasingly being utilized to automate decision-making or processes within applications and services, as opposed to just populating data visualizations.

Because of this, real-time analytics utilizes a fundamentally different architectural approach and different tooling compared to batch analytics.

Real-Time Analytics vs Streaming Analytics

Sometimes real-time data analytics is confused with streaming analytics. There are several streaming analytics products available today. They work great for some streaming use cases, but they all fall short when handling the high-concurrency, low-latency, and long data retention demands of real-time applications.

Streaming analytics systems don't leverage a full OLAP database that enables queries over arbitrary time spans (vs. window functions), advanced joins for complex use cases, managed materialized views for rollups, and many other real-time analytics requirements.

Streaming analytics answers questions about a particular thing at a particular moment. Questions like “Is this thing A or B?” or “Does this piece of data have A in it?” as data streams through. Streaming data analytics allows you to ask simple questions about a few things very close together in time. It can offer very low latency, but it comes with a catch: it has limited “memory.”

Real-time data analytics, in contrast, has a long memory. It very quickly ingests data and retains historical data to answer questions about current data in the context of historical events. Unlike streaming analytics, which uses stream processing engines for transformations, real-time analytics leverages a full online analytical processing (OLAP) engine that can handle complex analytics over unbounded time windows.

In addition, real-time analytics often supports "pull-based" user-facing APIs with variable demand, whereas streaming analytics is often used to sink analytical transformations into a data warehouse or in-memory cache.

Examples of real-time analytics

When you think about data analytics, you probably picture some dashboard with a combination of bar charts, line charts, and pie charts.

While real-time analytics can certainly be applied to building real-time data visualizations, the possibilities that real-time analytics enables extend beyond just dashboarding.

Below are real customer examples of real-time analytics in action:

Real-time marketing optimizations in sports betting and gaming

Real-time analytics can be used to optimize marketing campaigns in real-time. For example, FanDuel is North America's largest online sports betting platform.

FanDuel uses real-time analytics to build and monitor optimized marketing campaigns in real-time. In addition, FanDuel is able to personalize user betting journeys, identify fraud and problem gambling in real time, and provide VIP customer service, all thanks to real-time analytics.

Real-time personalization in the travel industry

Real-time analytics can be applied to real-time personalization use cases. For example, The Hotels Network is an online booking platform that hoteliers use to place personalized booking offers in front of their customers based on real-time data.

The Hotels Network utilizes a real-time analytics platform to capture user browsing behavior as clickstream events as soon as they are generated, process hundreds of millions of real-time data points every day, and expose personalized data recommendations on their customers' websites as the user is browsing. In addition, customers of The Hotels Network can use real-time data to benchmark their booking performance against their competitors.

By dynamically updating a user's booking experience and offering real-time insights into competitive behavior using real-time analytics, The Hotels Network dramatically improves conversion rates for its customers.

Real-time, personalized news feeds

Real-time analytics can be used to create up-to-date content feeds based on user preferences. For example, companies like daily.dev use real-time analytics to create personalized news feeds for their users.

The real-time analytics platform captures user content interaction events in real-time and creates a real-time API that prioritizes content based on real-time user preferences. The result is a new, highly personalized news feed that updates every time it's refreshed.

Real-time web analytics for product builders

Real-time analytics can be used to build user-facing analytics dashboards that update in real-time. For example, Vercel is a platform for web developers to build, preview, and deploy web applications.

Vercel uses real-time analytics to show its users how their users are interacting with their web applications in real-time. Vercel's customers no longer have to wait 24 hours or more for data to begin populating in their analytics dashboards. Using real-time analytics, Vercel has increased customer adoption by analyzing petabytes of data every day in real time.

User-facing analytics in human resources

Real-time analytics can be used to show customers how they are interacting with SaaS products. For example, Factorial is a modern HR SaaS that is changing the way employees interact with their employers.

Factorial uses real-time analytics to build user-facing dashboards that show employees how to optimize the way the work, and give employers tools for better managing employee satisfaction and productivity.

All of this is powered by real-time analytics that analyzes user interactions with their online HR platform in real-time.

Real-time analytics use cases

As the above examples indicate, real-time analytics can be used for more than just dashboarding, instead being applied in many ways to improve customer experiences, optimize user-facing features based on real-time data, and unlock new business opportunities. Below is a long list of use cases for which real-time analytics is an ideal approach:

- Sports betting and gaming. Real-time analytics can help sports betting and gaming companies reduce time-to-first-bet, improve the customer experience through real-time personalization, maintain leaderboards in real-time based on in-game events, segment users in real-time for personalized marketing campaigns, and reduce the risk of fraud. (learn more about Sports Betting and Gaming Solutions)

- Inventory and stock management. Real-time analytics can help online retailers optimize their fulfillment centers and retail location inventory to reduce costs, provide a modern customer experience with real-time inventory and availability, improve operational efficiency by streamlining supply chains, and make better decisions using real-time data and insights into trends and customer behavior. (learn more about Smart Inventory Management)

- Website analytics. Real-time analytics can help website owners monitor user behavior as it happens, enabling them to make data-driven decisions that can improve user engagement and conversion rates even during active sessions. (related: How an eCommerce giant replaced Google Analytics)

- Personalization. Real-time analytics can help companies personalize user experiences as a customer is using a product or service, based on up-to-the-second user behavior, preferences, history, cohort analysis, and much more. (learn more about Real-time Personalization)

- User-facing analytics. Real-time analytics can give product owners the power to inform their end users with up-to-date and relevant metrics related to product usage and adoption, which can help users understand the value of the product and reduce churn. (learn more about User-facing Analytics)

- Operational intelligence. Real-time analytics can help companies monitor and optimize operational performance, enabling them to detect and remediate issues the moment they happen and improve overall efficiency. (learn more about Operational Intelligence)

- Anomaly detection and alerts. Real-time analytics can be used to detect real-time anomalies, for example from Internet of Things (IoT) sensors, and not only trigger alerts but build self-healing infrastructure. (learn more about Anomaly Detection & Alerts)

- Software log analytics. Real-time analytics can help software developers build solutions over application logs, enabling them to increase their development velocity, identify issues, and remediate them before they impact end users. (Related: Cutting CI pipeline execution time by 60%)

- Trend forecasting. Across broad industry categories, real-time data analytics can be used as predictive analytics to forecast trends based on the most recent data available. (Related: Using SQL and Python to create alerts from predictions)

- Usage-based pricing. Real-time analytics can help companies implement usage-based pricing models, enabling them to offer personalized pricing based on real-time usage data. (learn more about Usage-Based Pricing)

- Logistics management. Real-time analytics can help logistics companies optimize routing and scheduling, enabling them to improve delivery times and reduce costs.

- Security information and event management. Real-time analytics can help companies detect security threats and trigger automated responses, enabling them to mitigate risk and protect sensitive data.

- Financial services. Real-time analytics can be used for real-time fraud detection. Fraudulent transactions can be compared to historical trends so that such transactions can be stopped before they go through.

- Customer 360s. Real-time analytics can help companies build a comprehensive and up-to-date view of their customers, enabling them to offer personalized experiences and improve customer satisfaction.

- Artificial intelligence and machine learning. Real-time analytics can power online feature stores to help AI and ML models learn from accurate, fresh data, enabling them to improve accuracy and predictive performance in data science projects over time.

Benefits of real-time analytics

Some benefits of adopting real-time analytics include:

- Faster decision-making

- Automated, intelligent software

- Improved customer experiences

- Better cost and process efficiencies

- Competitive differentiation

Real-time analytics is becoming increasingly popular thanks to the many opportunities it unlocks for businesses that want to leverage real-time data. Here's how real-time analytics is increasing the value of companies' data.

Faster decision-making

Real-time data analytics answers complex questions within milliseconds, a feat that batch processing cannot achieve. In doing so, it allows for time-sensitive reactions and interventions (for example, in healthcare, manufacturing, or retail settings) made by humans who can interpret data more quickly to spur faster decisions.

Automated, intelligent software

Real-time data doesn’t just boost human decision making, but increasingly enables automated decisions within software. Software applications and services can interact with the outputs of real-time analytics systems to automate functions based on real-time metrics.

Improved customer experiences

Real-time data can provide insights into customer behavior, preferences, and sentiment as they use products and services. Applications can then provide interactive tools that respond to customer usage, share information with customers through transparent in-product visualizations, or personalize their product experience within an active session.

Better cost and process efficiencies

Everybody thinks real-time analytics is more costly than batch analytics. In fact, real-time data can be used to optimize business processes, reducing costs and improving efficiency. This could include identifying and acting on cost-saving opportunities, such as reducing energy consumption in manufacturing processes or optimizing fleet routes.

Real-time data analytics can also help identify performance bottlenecks or identify testing problems early, enabling developers to quickly optimize application performance both before and after moving systems to production.

Competitive differentiation

Real-time data can create a competitive moat for businesses that build it well. Businesses that integrate real-time data into their products generally provide better, more differentiated customer experiences.

In addition, real-time data provides a two-pronged speed advantage: It not only enables the development of differentiating features but also provides faster feedback loops based on customer needs. Companies that use real-time data can get new features to market more quickly and outpace competitors.

Challenges with real-time analytics

Building a real-time analytics system can feel daunting. In particular, seven challenges arise when making the shift from batch to real-time:

- Using the right tools for the job

- Adopting a real-time mindset

- Managing cross-team collaboration

- Handling scale

- Enabling real-time observability

- Evolving data projects in production

- Controlling costs

Using the right tools for real-time data

Real-time data analytics demands a different toolset than traditional batch data pipelines, business intelligence, or basic app development. Instead of data warehouses, batch ETLs, DAGs, and OLTP or document-store app databases, engineers building real-time analytics need to use streaming technologies, real-time databases, and API layers effectively.

And because speed is so critical in real-time analytics, engineers must bridge these components with minimal latency, or turn to a real-time data platform that integrates each function.

Either way, developers and data teams must adopt new tools when building real-time applications.

Adopting a real-time mindset

Of course, using new tools won’t help if you’re stuck in a batch mindset.

Batch processing (and batch tooling like dbt or Airflow) often involves regularly running the same query to constantly recalculate certain results based on new data. In effect, much of the same data gets processed many times.

But if you need to have access to those results in real-time (or over fresh data), that way of thinking does not help you.

A real-time mindset focuses on minimizing data processing - optimizing to process raw data only once - to both improve performance and keep costs low.

To minimize query latencies and process data at scale while it’s still fresh, you have to:

- Filter out and avoid processing anything that’s not essential to your use case, to keep things light and fast.

- Consider materializing or enriching data at ingestion time rather than query time, so that you make your downstream queries more performant (and avoid constantly scanning the same data).

- Keep an optimization mindset at all times: the less data you have to scan or process, the lower the latency you’ll be able to provide within your applications, and the more queries that you’ll be able to push through each CPU core.

Handling scale

Real-time data analytics combines the scale of “Big Data” with the performance and uptime requirements of user-facing applications.

Batch processes are less prone to the negative effects caused by spikes in data generation. Like a dam, they can control the flow of data. But real-time applications must be able to handle and process ingestion peaks in real-time. Consider an eCommerce store on Black Friday. To support use cases like real-time personalization during traffic surges, your real-time infrastructure must respond to and scale with massive data spikes.

To succeed with real-time analytics, engineers need to be able to manage and maintain data projects at scale and in production. This can be difficult without adding additional tooling and or hiring new resources.

Enabling real-time observability

Failures in real-time infrastructure happen fast. Detecting and remediating scenarios that can negatively impact production requires real-time observability that can keep up with real-time infrastructure.

If you’re building real-time data analytics in applications, it’s not enough for those applications to serve low-latency APIs. Your observability and alerting tools need to have similarly fast response times so that you can detect user-affecting problems quickly.

Evolving real-time data projects in production

In a batch context, schema migrations and failed data pipelines might only affect internal consumers, and the effects appear more slowly. But in real-time applications, these changes will have immediate and often external ramifications.

For example, changing a schema in a dbt pipeline that runs every hour gives you exactly one hour to deploy and test new changes without affecting any business process.

Changes in real-time infrastructure, on the other hand, only offer milliseconds before downstream processes are affected. In real-time applications, schema evolutions and business logic changes are more akin to changes in software backend applications, where an introduced bug will have an immediate and user-facing effect.

In other words, changing a schema while you are writing and querying over 200,000 records per second is challenging, so a good migration strategy and tooling around deployments is critical.

Managing cross-team collaboration

Up until recently, data engineers and software developers often focused on different objectives. Data engineers and data platform teams built infrastructure and pipelines to serve business intelligence needs. Software developers and product teams designed and built applications for external users.

With real-time data analytics, these two functions must come together. Companies pursuing real-time analytics must lean on data engineers and platform teams to build real-time infrastructure or APIs that developers can easily discover and build with. Developers must understand how to use these APIs to build real-time data applications.

As you and your data grow, managing this collaboration becomes critical. You need systems and workflows in place that let developers and engineers “flow” in their work while still enabling effective cross-team work.

This shift in workflows may feel unfamiliar and slow. Still, data engineers and software developers will have to work closely to succeed with real-time data analytics.

Controlling the costs of real-time analytics

Many are concerned that the cost of real-time data analytics will outweigh the benefits. Because of the challenges that real-time analytics poses, controlling costs with real-time analytics can be difficult. This is especially true for teams making the transition from batch data processing to real-time processing.

Real-time analytics demands new tools, new ways of working, increased collaboration, added scale, and complex deployment models. These factors introduce new dependencies and requirements that, depending on your design, can create serious cost sinks.

If you’re not careful, added costs can appear anywhere and in many ways: more infrastructure and maintenance, more SREs, slower time to market, and added tooling.

There is always a cost associated with change, but real-time analytics doesn't have to be more expensive than traditional approaches. In fact, if you do it right, you can achieve an impressive ROI with real-time analytics.

Here are a few tips for controlling costs when building real-time analytics:

- Use the right tools for real-time analytics. It can be tempting to try to leverage existing infrastructure, especially cloud data warehouses, for real-time analytics. But this is a classic "square peg, round hole" situation that will prove costly. Data warehouses are not optimized for real-time analytics, and attempting to make them so generally results in very high cloud compute costs.

Instead, offload real-time analytics workloads to real-time data platforms optimized for real-time analytics. These platforms are specifically designed and specified for handling real-time analytics at scale. - Follow SQL best practices. The popularity of SQL and the rise of Big Data have made it possible for people with a modicum of data experience to make ad hoc queries to a company's data warehouse for answers to pressing questions.

In theory, the consequences of unoptimized queries are mitigated when the query is only being run infrequently.

With real-time analytics, however, SQL-defined data transformations will run continually, perhaps millions of times per day. Unoptimized SQL queries will balloon costs with real-time analytics if only because those queries will be run considerably more often. Follow these SQL best practices to make sure your queries are optimized for real-time analytics. - Consider managed infrastructure. Real-time analytics carries a learning curve. It requires new skills and new ways of thinking.

Competent, qualified data professionals who can manage real-time data at scale are scarce in today's market. Rather than hire expensive resources to try to manage new tools and infrastructure, consider using managed, well-integrated infrastructure that can support your real-time analytics use cases from end to end. - Choose modern tools. If you're still trying to use Hadoop or Snowflake for real-time analytics, you're behind. New technologies are emerging that make real-time analytics easier to develop. Choose real-time databases, real-time data platforms, and other modern tools that increase your chance of success with real-time analytics.

Real-time analytics tools and architecture

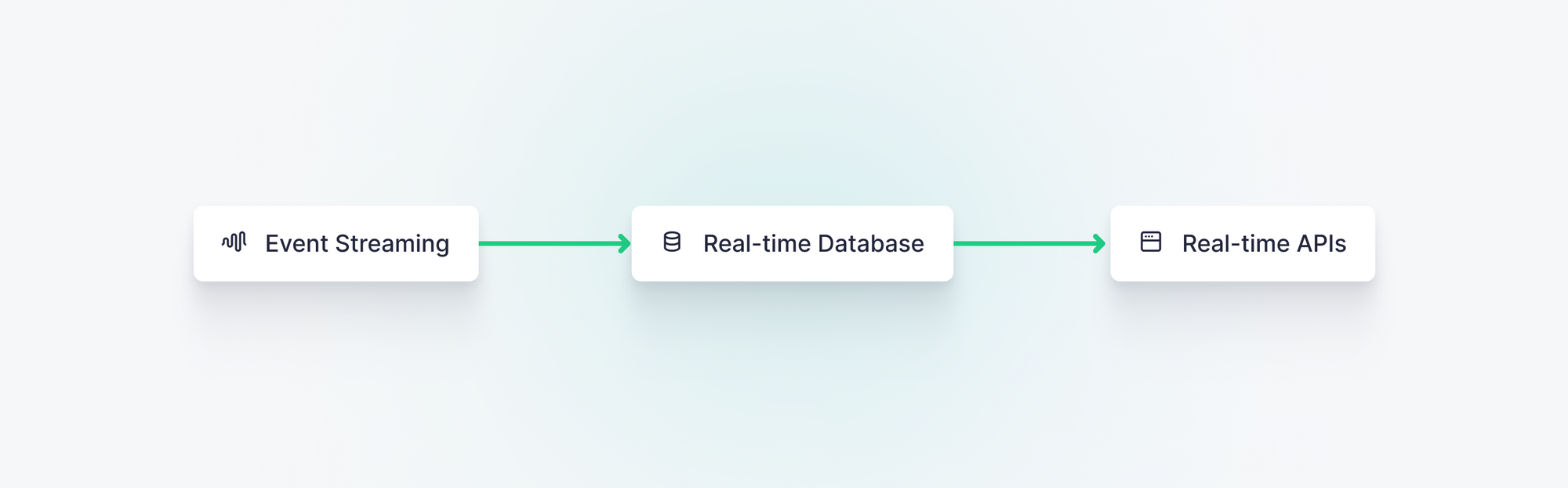

Real-time data architectures consist of three core components:

- Data streaming technologies

- Real-time databases

- Real-time APIs

Combined, these core components can be integrated and augmented to build many different variations of scalable real-time data architectures.

Below you'll find lists of common tools and technologies that can be used to build real-time analytics:

Data streaming technologies

Since real-time data analytics requires high-frequency ingestion of events data, you’ll need a reliable way to capture streams of data generated by applications and other systems.

The most commonly used data streaming technology is Apache Kafka, an open-source distributed event streaming platform used by many. Within the Kafka ecosystem exist many “flavors” of Kafka offered as a service or with alternative client-side libraries. Notable options here include:

- Confluent

- Redpanda

- Upstash

- Amazon MSK

- Aiven

- Self-hosted

While Kafka and its offshoots are broadly favored in this space, a few alternatives have been widely adopted, for example:

- Google Pub/Sub

- Amazon Kinesis

- RabbitMQ

- Tinybird Events API

Regardless of which streaming platform you choose, the ability to capture streaming data is fundamental to the real-time data analytics stack.

Real-time databases

Real-time analytics architectures tend to include a columnar real-time database that can store incoming and historical events data and make it available for low-latency querying.

Real-time databases should offer high throughput on inserts, columnar storage for compression and low-latency reads, and functional integrations with publication layers.

Critically, most standard transactional and document-store databases are not suitable for real-time analytics, so a column-oriented OLAP should be the database of choice.

The following databases have emerged as the most popular open-source databases for real-time analytics:

- ClickHouse®

- Druid

- Pinot

Real-time databases are built for high-frequency inserts, complex analytics over large amounts of data, and low-latency querying.

Real-time API layers

To make use of data that has been stored in real-time databases, developers need a publication layer to expose queries made on that database to external applications, services, or real-time data visualizations. This often takes the form of an ORM or an API framework.

One particular challenge with building real-time data architectures is that analytical application databases tend to have less robust ecosystems than their OLTP counterparts, so there are often fewer options to choose from here, and those that exist tend to be less mature and with smaller communities.

So, publication layers for real-time data analytics generally require that you build your own custom backend to meet the needs of your application. This means building yet another HTTP API using tools like:

- FastAPI (Python)

- Express.js (JavaScript)

- Hyper (Rust)

- Gin (Go)

Integrating real-time analytics tools

Each of the 3 core components - data streaming technology, real-time database, and API layer - matters when building the ideal real-time data architecture, and while such an architecture can be constructed piecemeal, beware of technical handoffs that inevitably introduce latency and complexity.

Recently, real-time data platforms such as Tinybird have combined these core components into a unified and integrated technology stack, providing in a managed service the critical functionality for building and maintaining real-time analytics.

Tinybird is the leading real-time analytics platform

The next wave of real-time applications and systems require extraordinary processing speed and storage and have been historically difficult and expensive to build.

But that changes with Tinybird.

Tinybird is the industry-leading real-time analytics platform. With Tinybird, software developers can harness the power of real-time data to quickly and cost-effectively build real-time data analytics and the applications they power.

Here's how Tinybird handles the performance requirements of real-time analytics:

- High-frequency ingestion of events and dimensions from multiple data sources. Tinybird provides data streaming through its HTTP streaming endpoint as well as native integrations with streaming platforms like Kafka and Confluent. In addition, Tinybird provides a real-time database that can handle writes at hundreds of megabytes per second magnitude and with very low latency. Tinybird's underlying database technology is capable of insert throughput performance of 50-200 MB/s and up to 1M+ rows per second in common use cases, so it fits the bill for real-time analytics.

- Real-time data processing and transformation. Tinybird provides an intuitive SQL engine to define complex transformations that aggregate, filter, and enrich data as it streams in. In addition, Tinybird supports real-time materialized views that transform data incrementally and persist transformations into storage for rapid querying.

- Low-latency, high-concurrency publication layer. Finally, Tinybird makes it possible to instantly publish SQL transformations as low-latency, scalable APIs that can be used to build real-time dashboards and user-facing real-time analytics. Tinybird APIs can be easily optimized to respond in milliseconds for almost any use case.

Beyond table-stakes performance metrics, Tinybird makes it simple to rapidly develop, ship, and maintain real-time data analytics at scale.

Use Tinybird to ingest data from multiple sources at millions of events per second, query and shape that data using the 100% pure SQL you already know and love, and publish your queries as low-latency, high-concurrency REST APIs to consume in your applications.

Tinybird is a force multiplier for data teams and developers building real-time data analytics. Here are the factors that influence Tinybird’s position as the top real-time analytics platform.

- Performance. Performance is critical when it comes to real-time data platforms. Tinybird is a serverless platform built on top of ClickHouse®, the world’s fastest OLAP database for real-time analytics. Tinybird can handle massive volumes of data at streaming scale, supports a wide array of SQL joins, offers materialized views and the performance advantages they offer, and still maintains API latencies under a second for even very complex queries and use cases.

- Developer experience. Developer experience is critical when building analytics into user experiences. Developers need to be able to build quickly, fail fast, and safely maintain production systems. Tinybird’s developer experience is unparalleled amongst real-time data platforms. Tinybird offers a straightforward, flexible, and familiar experience, with both UI and CLI workflows, SQL-based queries, and familiar CI/CD workflows when working with OLAP databases. Things like schema migrations and database branching can all be managed in code with version control. These things combined reduce the learning curve for developers, streamline the development process, and enhance the overall developer experience.

- Faster speed to market. Of course, developer comfort is only a part of the story. Perhaps more critically, a better developer experience shortens the time to market. Tinybird empowers developers to push enterprise-grade systems into production more quickly and with more confidence. Through a combination of intuitive interfaces, built-in observability, and enterprise support, Tinybird enables developers to ship production code much faster than alternatives - which shortens the time between scoping and monetization.

- Fewer moving parts. Simplicity is key when it comes to real-time analytics Whereas other real-time analytics solutions require piecemeal integrations to bridge ingestion, querying, and publication layers, Tinybird integrates the entire real-time data stack into a single platform. This eliminates the need for multiple tools and components and provides a simple, integrated, and performant approach to help you reduce costs and improve efficiency.

- Works well with everything you already have. Interoperability is also important when evaluating a real-time data platform. Tinybird easily integrates with existing tools and systems with open APIs, first-party data connectors, and plug-ins that enable you to integrate with popular tools such as databases, data warehouses, streaming platforms, data lakes, observability platforms, and business intelligence tools.

- Managed scale. Tinybird allows product teams to focus on product development and release cycles, rather than scaling infrastructure. This not only improves product velocity, it also minimizes resource constraints. With Tinybird, engineering teams can hire fewer SREs that would otherwise be needed to manage, monitor, and scale databases and streaming infrastructure.

"Tinybird is a force multiplier. It unlocks so many possibilities without having to hire anyone or do much additional work. It’s faster than anything we could do on our own. And, it just works."

Senior Data Engineer, Top 10 Sports Betting and Gaming Company

How Tinybird reduces the cost of real-time analytics

You’re under pressure to reduce costs but maintain the same, if not greater, level of service. Fortunately, a real-time data platform like Tinybird can help you capture new value at a fraction of the cost.

Here are a few ways that Tinybird enables cost-effective development:

- Deliver impact across your business. Tinybird can ingest data from multiple sources and replace many existing solutions, from web analytics to inventory management to website personalization and much, much more.

- Developer productivity where it counts. Tinybird has a beautiful and intuitive developer experience. What used to take weeks can be accomplished in minutes. What used to take entire teams of engineers to build, debug, and maintain can now be accomplished by one industrious individual. Efficiency is a superpower, and Tinybird delivers.

- Reduced infrastructure and moving parts. Tinybird combines ingestion, data processing, querying, and publishing, enabling more use cases with less infrastructure to manage and fewer human resources required to manage said infrastructure. This means fewer hand-offs between teams and systems, faster time-to-market, and reduced costs.

- Automate your insights. React faster to opportunities and problems. No more waiting for those daily reports, you’ll be able to know in real time how your business is behaving and automate/operationalize your responses to it.

"Tinybird provides exactly the set of tools we need to very quickly deliver new user-facing data products over the data investments we’ve already made. When we switched to Tinybird, we ran a PoC and shipped our first feature to production in a month. Since then, we’ve shipped 12 new user-facing features in just a few months. There’s no way we could have done this without Tinybird."

Marc Gonzalez, Director of Data, Factorial HR

Getting started with real-time analytics

Who Uses Real-Time Analytics in an Organization?

Executive and Finance Leaders

Executives and finance teams rely on real-time analytics to monitor revenue, profitability, and risk as conditions change. They no longer wait for end-of-month reports. Instead, they track live KPIs and adjust budgets or strategies immediately. In financial services, leaders depend on real-time credit risk and exposure dashboards to act quickly and reduce uncertainty.

Operations and Supply Chain Managers

Operations and supply chain managers follow streaming signals from machines, sensors, and shipments. They use this data to detect bottlenecks, delays, and quality issues as soon as they emerge. In manufacturing, logistics, or energy, real-time analytics helps reduce downtime, lower waste, and maintain service reliability.

IT, Security, and DevOps Teams

IT and DevOps teams use real-time insights to keep systems healthy and performant. They analyze logs, metrics, and traces as they arrive to identify issues tied to recent deployments or configuration changes. Security teams build on streaming event data to spot suspicious behavior and automate responses before an incident escalates.

Marketing, Sales, and Product Teams

Marketing, sales, and product teams react to user behavior the moment it happens. Marketers adjust audiences, bids, and creative based on live campaign results instead of waiting for delayed reports. Product and sales teams track real-time usage and engagement to trigger timely outreach or launch in-app experiments.

Customer Service and Frontline Employees

Customer service agents and frontline staff benefit from up-to-the-second context about each user. Agents see a customer's recent actions, sentiment, and journey, which shortens resolution time. Retail or on-site teams use fresh data on stock, demand, and preferences to deliver better experiences on the spot.

Related Concepts and Complementary Capabilities

Real-Time Data Streaming and Event-Driven Architectures

Real-time analytics is strongly connected to data streaming and event-driven architectures. Continuous streams of clicks, transactions, or sensor events are processed the moment they occur. This enables low-latency pipelines essential for personalization, dynamic pricing, operational monitoring, and other time-sensitive features.

Distributed Processing Engines and SQL Workloads

Many platforms rely on distributed processing engines that scale horizontally across large clusters. These engines support streaming workloads, historical queries, and machine learning in a unified environment. SQL layers on top let teams express complex logic with familiar syntax while the platform handles parallel execution and optimization.

Real-Time Data Visualization

Real-time visualizations turn continuous data into live dashboards and interactive charts. Users can immediately spot anomalies, trends, or changes in performance. These same visualization components can be embedded directly into products so customers interact with live data during their workflows.

Predictive Analytics and Next Best Actions

When real-time analytics is paired with predictive models, organizations can anticipate what will happen next. Models built on current and historical signals can predict churn, fraud, equipment failures, or inventory shortages. Evaluated continuously, these predictions enable next best actions such as approvals, interventions, or personalized offers delivered instantly.

Empowering End Users With Embedded and Self-Service Analytics

A major trend is giving end users direct access to live insights. Embedded analytics brings real-time metrics into the software products customers already use every day. Self-service interfaces allow business users to explore and filter live data on their own, shrinking the gap between insight and action.

So how do you begin to build real-time data analytics into your next development project? As this guide has demonstrated, there are 3 core steps to building real-time data analytics:

- Ingesting real-time data at streaming scale

- Querying the data to build analytics metrics

- Publishing the metrics to integrate into your apps

Below you’ll find practical steps on ingesting data from streaming platforms (and other sources), querying that data with SQL, and publishing low-latency, high-concurrency APIs for consumption within your applications.

If you’re new to Tinybird, you can try it out by signing up for a free-forever Build Plan, with no credit card required, no time restrictions, and generous free limits.

Ingesting real-time data into Tinybird

Tinybird supports ingestion from multiple sources, including streaming platforms, files, databases, and data warehouses. Here’s how to code ingestion from various sources using Tinybird.

Ingest real-time data from Kafka

Tinybird enables real-time data ingestion from Kafka using the native Kafka connector. You can use the Tinybird UI to set up your Kafka connection, choose your topics, and define your ingestion schema in a few clicks. Or, you can use the Tinybird CLI to develop Kafka ingestion pipelines from your terminal.

To learn more about building real-time data analytics on top of Kafka data, check out these resources:

- Docs - Tinybird Kafka Connector

- Blog Post - From Kafka streams to data products

- Live Coding Session - Build low-latency analytics APIs on top of Kafka data

Note that this applies to any Kafka-compatible platform such as Confluent, Redpanda, Upstash, Aiven, or Amazon MSK.

Ingest data from object storage like Amazon S3

Tinybird makes it easy to build real-time analytics over files stored in Amazon S3. With Tinybird, you can synchronized data from CSV, NDJSON, or Parquet files stored in Amazon S3, develop analytics with SQL, and publish your metrics as low-latency, scalable APIs.

Connecting to S3 is simple with Tinybird's S3 Connector. Just set your connection, select your bucket, define your sync rules, and start bringing S3 data directly into Tinybird.

Check out these resources below to learn how to build real-time analytics on top of object storage:

Ingest data from CSV, NDJSON, and Parquet files

Tinybird enables data ingestion from CSV, NDJSON, and Parquet files, either locally on your machine or remotely in cloud storage such as GCP or S3 buckets. While data stored in files is often not generated in real time, it can be beneficial as dimensional data to join with data ingested through streaming platforms. Tinybird has wide coverage of SQL joins to make this possible.

You can ingest real-time data from files using the Tinybird UI, using the CLI, or using the Data Sources API.

Here are some resources to learn how to ingest data from local or remote files:

- Docs - Ingest data from CSV files into Tinybird

- Docs - How to ingest NDJSON data into Tinybird

- Docs - The Tinybird Datasources API

- Blog Post - Querying large CSVs online with SQL

Ingest from your applications via HTTP

Perhaps the simplest way to capture real-time data into Tinybird is using the Events API, a simple HTTP endpoint that enables high-frequency ingestion of JSON records into Tinybird.

Because it’s just an HTTP endpoint, you can invoke the API from any application code. The Events API can handle ingestion at up to 1000 requests and 20+ MB per second, making it super scalable for most streaming use cases.

Check out the code snippets below for example usage in your favorite language.

For more info on building real-time data analytics on top of application data using the Events API, check out these resources:

For more info on building real-time data analytics on top of application data using the Events API, check out these resources:

- Docs - The Tinybird Events API

- Guide - Ingest data into Tinybird with an HTTP request

- Screencast - Stream data with the Tinybird Events API

Query and shape real-time data with SQL

Tinybird offers a delightful interface for building real-time analytics metrics using the SQL you know and love.

With Tinybird pipes you can chop up more complex queries into chained, composable nodes of SQL. This simplifies development flow and makes it easy to identify queries that impede performance or increase latency.

Tinybird pipes also include a robust templating language to extend your query logic beyond SQL and publish dynamic, parameterized endpoints from your queries.

Below are some example code snippets of SQL queries written in Tinybird pipes for simple real-time analytics use cases.

For more info on building real-time data analytics metrics with Tinybird pipes, check out these resources:

- Docs - What are Tinybird Pipes?

- Guide - Best practices for faster SQL queries

- Blog - To the limits of SQL… and beyond

Publish real-time data APIs

Tinybird shines in its publication layer. Whereas other real-time data platforms or technologies may still demand that you build a custom backend to support user-facing applications, Tinybird massively simplifies application development with instant REST API publication from SQL queries.

Every API published from a Tinybird pipe includes automatically generated, OpenAPI-compatible documentation, security through auth token management, and built-in observability dashboards and APIs to monitor endpoint performance and usage.

Furthermore, Tinybird APIs can be parameterized using a simple templating language. By utilizing the templating language in your SQL queries, you can build robust logic for dynamic API endpoints.

To learn more about how to build real-time data analytics APIs with Tinybird, check out these resources:

Start building with Tinybird

Ready to experience the industry-leading real-time data platform? Sign up to Tinybird for free (no time limit), or start building right away: